No. This is not an April Fools Post. I mean, it is, but it’s not.

Let’s create some simple examples of using AI Agent Frameworks. In fact, these examples are so simple they fit into the first category of agent use: Basic Responder.

There. Are. So. Many. Available.

I have chosen four (from easiest to not-so-easy): Goose, SmolAgents, AutoGen, and LangChain.

So I want to use local LLMs. There are a few ways to run LLMs on your box, but truth be told there are a few leaders out there and I am too lazy to use more than one of them, so…LM Studio it is. My URL is http://192.168.something.something:1234 (for some reason it has changed over time). Yours will be different. In fact, you might be able to use the hostname. Sadly, for me, that means updating certain files, and I just can’t get myself to care. I use the local IP address listed in LM Studio when I run its server. Whatever works for you. The cat has no opinion.
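Since that address likes to wander, a quick reachability check can save some head-scratching. Here is a minimal sketch, assuming the LM Studio server exposes the OpenAI-style `/v1/models` endpoint. The function names and the sample address are made up for illustration.

```python
import json
import urllib.request


def models_url(server_url: str) -> str:
    """Build the OpenAI-style model listing URL for a server address."""
    return server_url.rstrip("/") + "/v1/models"


def parse_model_ids(payload: dict) -> list:
    """Pull the model IDs out of an OpenAI-style /v1/models response."""
    return [entry["id"] for entry in payload.get("data", [])]


def list_models(server_url: str, timeout: float = 5.0) -> list:
    """Ask the server which models it offers (the server must be running)."""
    with urllib.request.urlopen(models_url(server_url), timeout=timeout) as resp:
        return parse_model_ids(json.load(resp))
```

With the server started, something like `list_models("http://192.168.1.50:1234")` (swap in your own address) should come back with the model names LM Studio knows about. If it times out, the URL is the first thing to check.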

Due purely to my innate paranoia I have created Docker containers for each of these environments. This approach allows me to share a local volume to securely store any code created. I don’t have to worry about polluting my local environment with various frameworks and their dependencies.

Seriously.

I choose my own poison (BTW, all the above files end with .txt as WordPress seems to have an allergy to certain file types. Rename as appropriate).

I will also admit that I used Docker Desktop on Windows 11 and not on Kubuntu 23.10 (which I should have updated about a year ago. Long story).

Ground Rules

Point #1: the goal of this post is to show you how to use a local LLM running on your network. I am using LM Studio. You should too. However, I understand if you prefer something like Ollama. Also, I typically use Qwen2.5 Coder 14B Instruct (the GGUF version). It is not the latest, but it is fast and gives pretty good answers.

Point #2: select some local spot on your file system where you can store the files created in this post. You’ll thank me later.
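The docker run commands in this post mount folders named mount-this/goose, mount-this/smolagents, mount-this/autogen, and mount-this/langgraph. If you would rather not create them by hand, here is a small sketch that does it. The function name is hypothetical, and where you put the root folder is entirely up to you.

```python
from pathlib import Path

# Folder names taken from the volume mounts in the docker run commands.
FRAMEWORKS = ["goose", "smolagents", "autogen", "langgraph"]


def create_mount_dirs(root: Path) -> list:
    """Create root/mount-this/<framework> for each framework and return the paths."""
    created = []
    for name in FRAMEWORKS:
        folder = root / "mount-this" / name
        # parents=True builds mount-this itself; exist_ok=True makes reruns harmless.
        folder.mkdir(parents=True, exist_ok=True)
        created.append(folder)
    return created
```

Calling `create_mount_dirs(Path.cwd())` from your chosen spot sets up all four folders in one go.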

Goose: No Code

But some configuration.

Save yourself the headache of configuring Goose on your local box. The dockerfile above should create what you need. It will open on a command line as soon as you docker run.

Create the Image

So, create the Goose container using the Dockerfile.goose file. In the command line below, I named the image created from the dockerfile i-goose. The container, when we run it, will be named c-goose (get it?). As mentioned above, I did all this on a Windows box (I know! I know!).

docker build -f Dockerfile.goose -t i-goose .

Start the Container

The first time you run it you will have to use the docker run command to start it.

docker run -it --name c-goose -v ./mount-this/goose:/app i-goose

The container command prompt should appear as soon as you run the above.

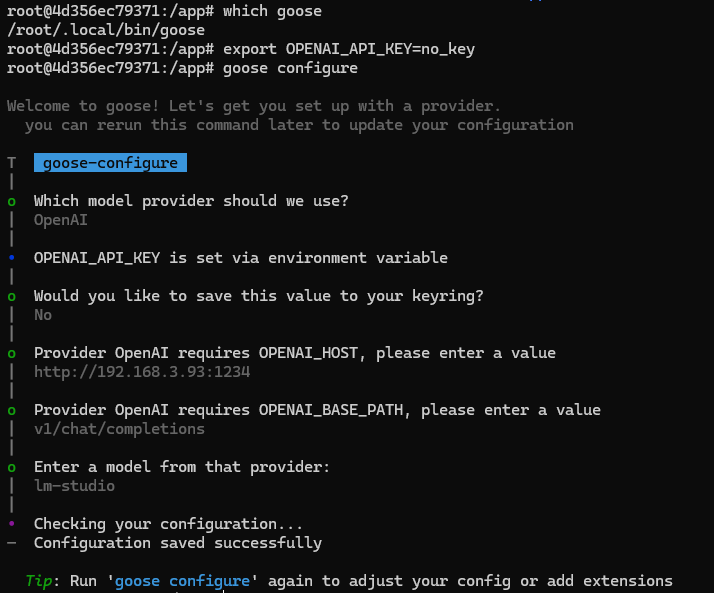

To set up a local LLM with the least pain using Docker running on Windows, do the following within your Goose container:

- Set an environment variable for your non-existent OpenAI key (you don’t need one when using a local LLM):

export OPENAI_API_KEY=no_key

- Start Goose with a command line option of configure:

goose configure

To configure Goose to work with LM Studio, enter the information as in the screen capture:

- Which model provider should we use? OpenAI (cursor down to the OpenAI choice and press Enter)

- Would you like to save this value to your keyring? No

- Provider OpenAI requires OPENAI_HOST, please enter a value [the URL to your LM Studio server]

- Provider OpenAI requires OPENAI_BASE_PATH, please enter a value [the default is perfect for LM Studio so just press Enter]

- Enter a model from that provider: [whatever name you like. If you are going to use multiple models then you want to enter the real name of the model to be used]

Don’t bother saving your model info into the keyring. In fact, it will fail if you try.
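For the curious, the host and base path presumably get glued together into one endpoint URL. Below is a rough sketch of the equivalent request, assuming the default base path is v1/chat/completions (check the default Goose shows you) and that LM Studio accepts the standard OpenAI chat payload. The function name and the sample address are hypothetical, and this is not Goose's actual code.

```python
import json
import urllib.request


def build_chat_request(host: str, base_path: str, model: str, prompt: str) -> urllib.request.Request:
    """Assemble an OpenAI-style chat completion request (nothing is sent yet)."""
    url = host.rstrip("/") + "/" + base_path.lstrip("/")
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            # Same dummy key as OPENAI_API_KEY above.
            "Authorization": "Bearer no_key",
        },
        method="POST",
    )


request = build_chat_request(
    host="http://192.168.1.50:1234",   # assumption: replace with your LM Studio address
    base_path="v1/chat/completions",   # assumption: the default base path
    model="lmstudio",                  # placeholder model name
    prompt="Say hi!",
)
print(request.full_url)
```

Passing `request` to `urllib.request.urlopen` would actually send it, which is a handy way to confirm the server answers before blaming Goose.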

Execute the Agent Code

Run LM Studio. Select a model (it doesn’t really matter which, as long as it doesn’t blow up your machine executing a simple prompt). Start the server.

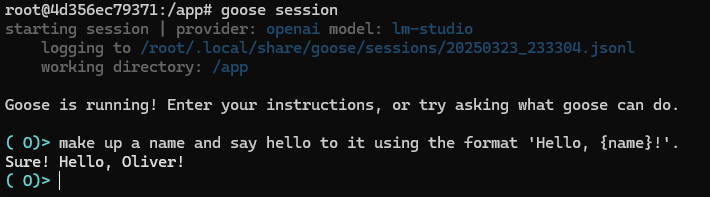

Run Goose using the session command line option.

goose session

Woo hoo! The cat is ecstatic. We got Goose to say hi!

(If you took a peek at your LM Studio server you would have seen the model responding to the Goose agent)

You must admit, from start to finish that was pretty quick…even for a goose.

You can continue to ask Goose questions like you would to ChatGPT, or Claude, or Perplexity. However, until we add the ability to use tools, its output will be restricted to whatever the LLM knows. We’ll look at adding tools in the next post.

When you exit the container that will also stop the container. To restart it use the docker start command (the -ai will reconnect to the bash shell).

docker start -ai c-goose

SmolAgents: Code

Save yourself the headache of configuring SmolAgents on your local box. The dockerfile above should create what you need. It will open on a command line as soon as you docker run (I’m having a sense of déjà vu).

Create the Image

Create the SmolAgents container using the Dockerfile.smolagents file. In the command line below, I named the image created from the dockerfile i-smolagents. The container, when we run it, will be named c-smolagents (do you sense a pattern in my naming conventions?).

docker build -f Dockerfile.smolagents -t i-smolagents .

This could take a while. The installation of smolagents[all] can take a bit.

Start the Container

The first time you run it you will have to use the docker run command to start it.

docker run -it --name c-smolagents -v "$(pwd)/mount-this/smolagents:/workspace" i-smolagents

Notice, just like the Goose container, there is no external port assigned. We don’t need it yet.

The container command prompt should appear as soon as you run the above.

Execute the Agent Code

Run LM Studio and select a model. Doesn’t really matter which as long as it doesn’t blow up your machine executing a simple prompt.

Create a file, name it hello_world_agent.py, and enter the following code into it.

import os

from smolagents import CodeAgent, OpenAIServerModel

# Set the API base URL and API key
api_base = "http://192.168.3.93:1234/v1"
api_key = "no_key"

# Initialize the model
model = OpenAIServerModel(
    model_id="lmstudio",  # Replace with your specific model ID if different
    api_base=api_base,
    api_key=api_key,
)

# Create the agent with the model
agent = CodeAgent(tools=[], model=model)

# Define the task
task = "make up a name and say hello to it using the format 'Hello, {name}!'."

# Run the agent with the task
agent.run(task)

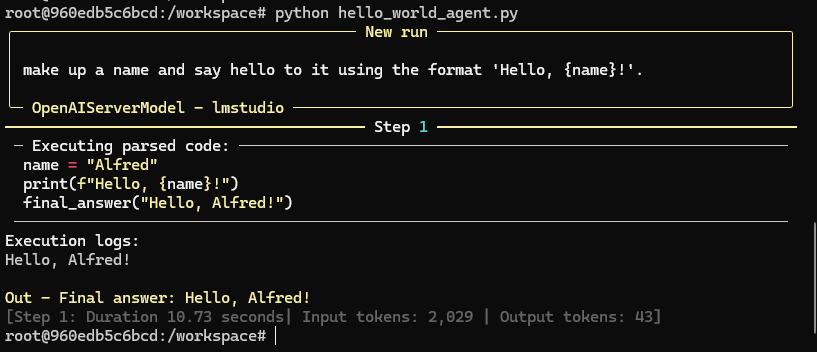

Note how little code there is:

- Define the LM Studio server URL

- Define a dummy API key/token

- Create the SmolAgent CodeAgent

- Run the agent with our prompt
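Because the server address tends to change, one option is to keep it out of the script entirely. Here is a small sketch that reads it from environment variables. The variable names (LMSTUDIO_URL, LMSTUDIO_API_KEY, LMSTUDIO_MODEL) and the function name are my own invention, not anything SmolAgents or LM Studio defines. The fallbacks are the values from the script above.

```python
import os


def lm_studio_settings(env=None) -> dict:
    """Read the LM Studio connection settings from the environment, with fallbacks."""
    env = os.environ if env is None else env
    base = env.get("LMSTUDIO_URL", "http://192.168.3.93:1234").rstrip("/")
    return {
        "api_base": base + "/v1",
        "api_key": env.get("LMSTUDIO_API_KEY", "no_key"),
        "model_id": env.get("LMSTUDIO_MODEL", "lmstudio"),
    }


# Passing a plain dict here just to demonstrate; normally os.environ is used.
settings = lm_studio_settings({"LMSTUDIO_URL": "http://192.168.3.93:1234"})
print(settings["api_base"])
```

The keys match the keyword arguments used in the script above, so `OpenAIServerModel(**lm_studio_settings())` should slot straight in. When the IP changes, you update one environment variable instead of editing the file.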

Execute the following from the container command prompt.

python hello_world_agent.py

(If you took a peek at your LM Studio server you would have seen the model responding to the CodeAgent. Yes, that is actual code. Python code. We’ll take advantage of that in a future post.)

As before, when you exit the container that will also stop the container. To restart it use the docker start command (the -ai will reconnect to the bash shell).

docker start -ai c-smolagents

In some ways, this was easier than using Goose. The fundamentals were the same: configure the agent with the proper model and go to town.

The cat is now quite amused.

AutoGen: Code

Save yourself the headache of configuring AutoGen on your local box. The dockerfile above should create what you need. It will open on a command line as soon as you docker run (again, déjà vu).

Create the Image

Create the AutoGen container using the Dockerfile.autogen file. In the command line below, I named the image created from the dockerfile i-autogen. The container, when we run it, will be named c-autogen (do you sense a pattern in my naming conventions?).

docker build -f Dockerfile.autogen -t i-autogen .

Start the Container

The first time you run it you will have to use the docker run command to start it.

docker run -it --name c-autogen -v "$(pwd)/mount-this/autogen:/workspace" i-autogen

Notice, just like the Goose container, there is no external port assigned. We don’t need it yet.

The container command prompt should appear as soon as you run the above.

Execute the Agent Code

Run LM Studio and select a model. Doesn’t really matter which as long as it doesn’t blow up your machine executing a simple prompt.

Create a file, name it hello_world_autogen.py, and enter the following code into it.

import autogen

# Configure the local model through LM Studio's OpenAI-compatible API
config_list = [
    {
        "model": "local-model",
        # Replace with your LM Studio server URL. Inside a container,
        # localhost refers to the container itself, not your machine.
        "base_url": "http://localhost:1234/v1",
        "api_key": "lm-studio",  # Placeholder API key for local server
    }
]

# Create the simplest possible assistant agent
assistant = autogen.AssistantAgent(
    name="assistant",
    llm_config={
        "config_list": config_list,
        "temperature": 0.7,
    },
)

# Create a user proxy agent to initiate the conversation
user_proxy = autogen.UserProxyAgent(
    name="user_proxy",
    human_input_mode="NEVER",  # Automatic mode
    max_consecutive_auto_reply=1,
)

# Send a simple prompt
user_proxy.initiate_chat(
    assistant,
    message="make up a name and say hello to it using the format 'Hello, {name}!'.",
)

Note how little code there is:

- Define the LM Studio server URL

- Define a dummy API key/token

- Create the AutoGen AssistantAgent

- Create the AutoGen UserProxyAgent (not really needed in this case, but it is good form to use it)

- Run the agent with our prompt

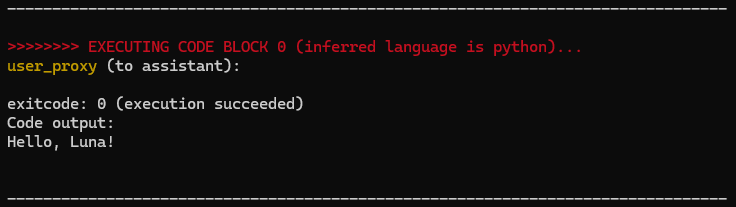

Execute the following from the container command prompt.

python hello_world_autogen.py

(If you took a peek at your LM Studio server you would have seen the model responding to the AssistantAgent. And yes, once again the agent generated actual code. Python code. We’ll take advantage of that in a future post.)

There was a lot of output. We only care about the stuff in the middle.

Woo hoo! Hello, Luna!
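If you only want the stuff in the middle, you can fish the assistant's reply out of the transcript instead of reading the whole dump. Here is a hedged sketch, assuming the conversation is available as a list of dictionaries with name and content keys. In the AutoGen releases where initiate_chat returns a result object with a chat_history attribute, that list could be passed in, but check your version. The function name and the sample data are made up.

```python
from typing import Optional


def last_reply(chat_history: list, speaker: str = "assistant") -> Optional[str]:
    """Return the most recent non-empty message written by `speaker`, or None."""
    for message in reversed(chat_history):
        content = (message.get("content") or "").strip()
        if message.get("name") == speaker and content:
            return content
    return None


# Hand-written stand-in for a transcript (not real AutoGen output).
sample_history = [
    {"name": "user_proxy", "content": "make up a name and say hello to it"},
    {"name": "assistant", "content": "Hello, Luna!"},
    {"name": "user_proxy", "content": ""},
]
print(last_reply(sample_history))  # Hello, Luna!
```

Walking the list backwards means a trailing empty auto-reply from the user proxy gets skipped rather than returned.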

As before, when you exit the container that will also stop the container. To restart it use the docker start command (the -ai will reconnect to the bash shell).

docker start -ai c-autogen

I hope you are noticing the similarities in the code. The frameworks are not that different from that perspective…but they are very different. We’ll see how much in a future post.

The cat was not impressed.

LangChain: Code

Create the Image

Create the LangGraph container using the Dockerfile.langgraph file. In the command line below, I named the image created from the dockerfile i-langgraph. The container, when we run it, will be named c-langgraph.

docker build -f Dockerfile.langgraph -t i-langgraph .

Start the Container

The first time you run it you will have to use the docker run command to start it.

docker run -it --name c-langgraph -v "$(pwd)/mount-this/langgraph:/workspace" i-langgraph

Notice, just like the Goose container, there is no external port assigned. We don’t need it yet.

The container command prompt should appear as soon as you run the above.

Execute the Agent Code

Run LM Studio and select a model. Doesn’t really matter which as long as it doesn’t blow up your machine executing a simple prompt.

Create a file, name it hello_world_langchain.py, and enter the following code into it.

from langchain_openai import ChatOpenAI

langChainAgent = ChatOpenAI(
    base_url="http://192.168.1.229:1234/v1",
    api_key="lm-studio",
)

response = langChainAgent.invoke("make up a name and say hello using the format 'Hello, {name}!'.")

greeting = response.content.strip()
print(greeting)

So. Little. Code.

What code there is looks like:

- Create a ChatOpenAI agent

- Define the LM Studio server URL

- Define a dummy API key/token

- Give the agent our prompt

- Run the agent and print the response

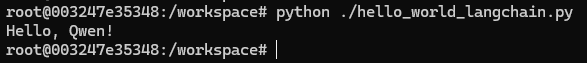

Execute the following from the container command prompt.

python hello_world_langchain.py

(If you took a peek at your LM Studio server you would have seen the model responding to the langChainAgent.)

Woo hoo! Hello, Qwen (is that a coincidence? Or did the LLM not make up a name?)!
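If you want to see which name the model picked without squinting at the output, a regular expression will do. Here is a quick sketch that pulls the name out of a reply in the 'Hello, {name}!' format. The function name is hypothetical and the sample replies are invented.

```python
import re
from typing import Optional

# Matches "Hello, <anything up to the next exclamation mark>!"
GREETING = re.compile(r"Hello, ([^!]+)!")


def extract_name(reply: str) -> Optional[str]:
    """Return the name from the first 'Hello, <name>!' in the reply, if any."""
    match = GREETING.search(reply)
    return match.group(1).strip() if match else None


print(extract_name("Sure! Hello, Qwen!"))  # Qwen
print(extract_name("Hi there"))            # None
```

Run it over a few replies and you can find out whether the model really is making names up or just keeps introducing itself.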

As before, when you exit the container that will also stop the container. To restart it use the docker start command (the -ai will reconnect to the bash shell).

docker start -ai c-langgraph

Again, note the similarities in the code. The frameworks are not that different from that perspective…but they are very different.

Final Thoughts

OMG! Was this not easy enough for you?

That was kinda the point. Who’s afraid of the big ugly AI Agent frameworks? Not you! Not anymore!

Any questions? Ask below!